Preface

Skip!

Skip!!

Skip!!!

Model download

```bash
git clone https://huggingface.co/OrionStarAI/Orion-14B-Chat-Plugin OrionStarAI/Orion-14B-Chat-Plugin
```
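A clone made without Git LFS set up leaves small text stubs in place of the weight files. The sketch below is one way to check the cloned directory for such stubs. It assumes only that Git LFS pointer files begin with the standard `version https://git-lfs.github.com/spec/v1` line; the helper name `find_lfs_pointers` is hypothetical and not part of any library.

```python
from pathlib import Path

# Every Git LFS pointer file starts with this line.
LFS_POINTER_PREFIX = b"version https://git-lfs.github.com/spec/v1"


def find_lfs_pointers(model_dir):
    """Return the files under model_dir that are still Git LFS pointer stubs."""
    root = Path(model_dir)
    pointers = []
    for path in sorted(root.rglob("*")):
        # Skip directories and Git's own metadata.
        if not path.is_file() or ".git" in path.relative_to(root).parts:
            continue
        with path.open("rb") as fh:
            if fh.read(len(LFS_POINTER_PREFIX)) == LFS_POINTER_PREFIX:
                pointers.append(path)
    return pointers
```

Calling `find_lfs_pointers("OrionStarAI/Orion-14B-Chat-Plugin")` after the clone should return an empty list when the weights were actually downloaded. If it lists files, running `git lfs pull` inside the repository is the usual fix.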

Installing dependencies

Orion depends on flash-attn. The CUDA Toolkit must be installed beforehand.
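Before building anything, it can help to confirm that the CUDA compiler is actually on the `PATH`. The following is a minimal sketch that assumes the toolkit exposes the usual `nvcc` executable; the function name `nvcc_version` is hypothetical.

```python
import shutil
import subprocess


def nvcc_version(executable="nvcc"):
    """Return the output of `nvcc --version`, or None if nvcc is not on PATH."""
    path = shutil.which(executable)
    if path is None:
        return None
    result = subprocess.run(
        [path, "--version"], capture_output=True, text=True, check=False
    )
    return result.stdout.strip() or None
```

If `nvcc_version()` returns `None`, the CUDA Toolkit is probably missing or its `bin` directory is not on the `PATH`.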

Prerequisite packages

- Install ninja

```bash
python3 -m pip install ninja
```

- Install packaging

```bash
python3 -m pip install packaging
```

- Install torch

```bash
python3 -m pip install torch
```

- Install numpy

```bash
python3 -m pip install numpy
```

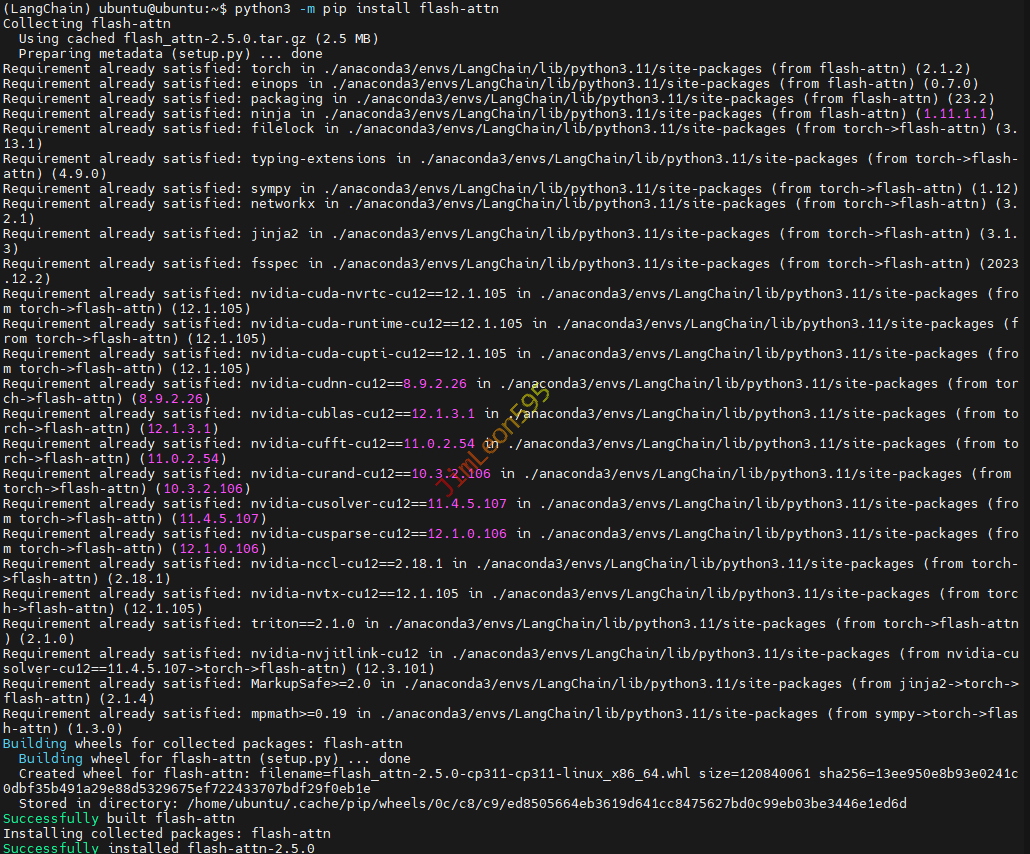

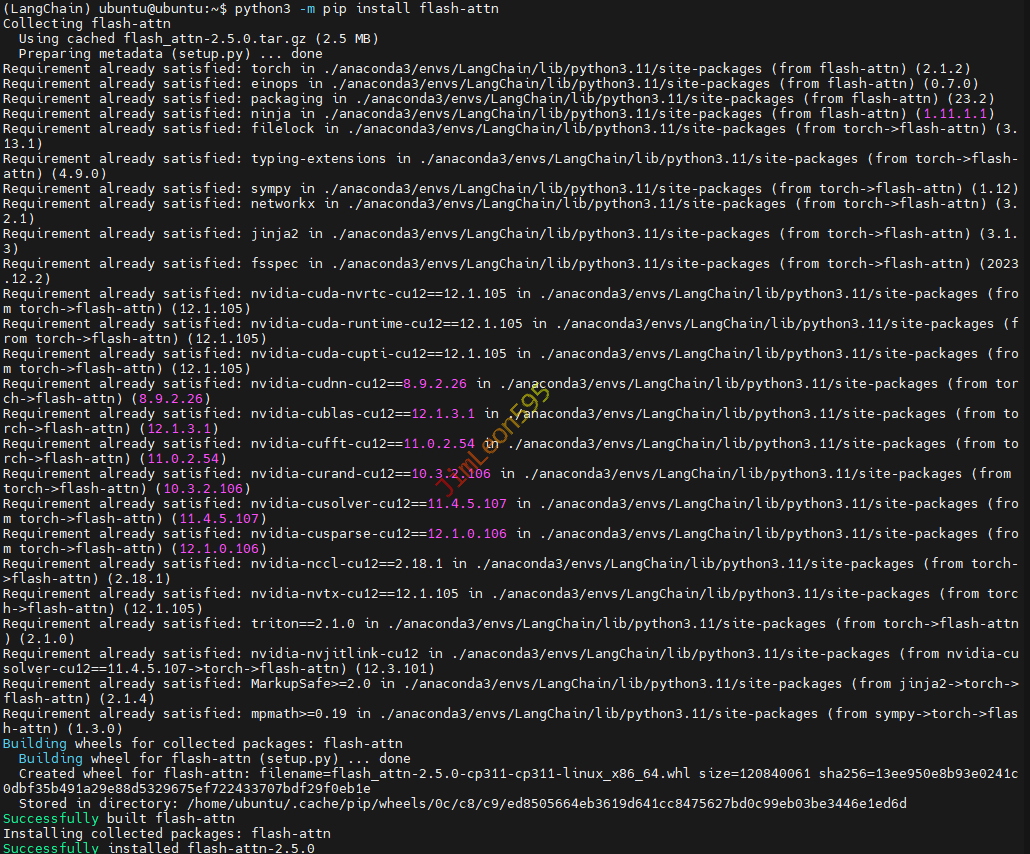
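The four prerequisite packages above can be checked in one pass before moving on to flash-attn. This is a rough sketch that uses only the standard library and assumes each package is importable under the same name it is installed with; `missing_modules` is a hypothetical helper name.

```python
import importlib.util

# Import names of the packages installed in the steps above.
PREREQUISITES = ("ninja", "packaging", "torch", "numpy")


def missing_modules(names=PREREQUISITES):
    """Return the names that cannot be found in the current Python environment."""
    return [name for name in names if importlib.util.find_spec(name) is None]
```

An empty list from `missing_modules()` suggests all four are visible to the interpreter that `python3` resolves to.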

Installing flash-attn

```bash
python3 -m pip install flash-attn
```

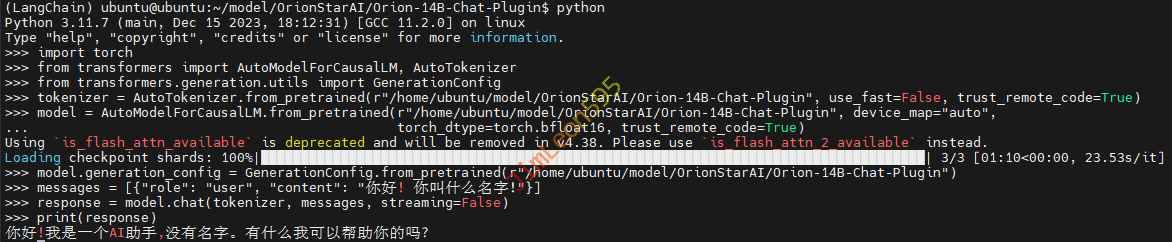

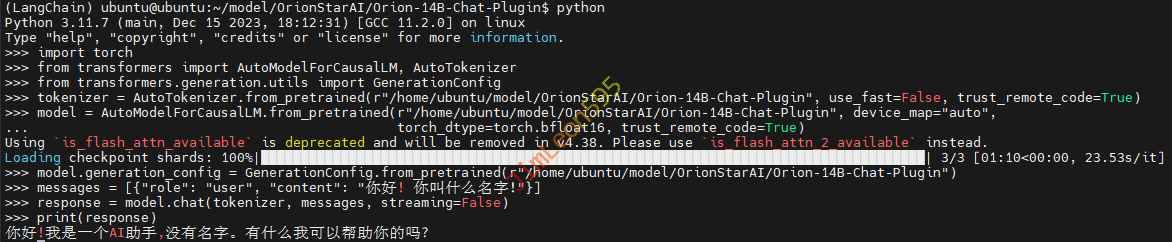
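Once the install finishes, a quick import check can confirm that the GPU stack is usable. The sketch below assumes the package is importable as `flash_attn` and that `torch.cuda.is_available()` is a sufficient signal for a working CUDA setup; `gpu_stack_report` is a hypothetical name.

```python
def gpu_stack_report():
    """Report whether torch, a CUDA device, and flash_attn are usable."""
    report = {"torch": False, "cuda_available": False, "flash_attn": False}
    try:
        import torch
    except ImportError:
        return report
    report["torch"] = True
    report["cuda_available"] = bool(torch.cuda.is_available())
    try:
        import flash_attn  # noqa: F401  (imported only to test availability)
        report["flash_attn"] = True
    except ImportError:
        pass
    return report
```

All three values being `True` is a reasonable sign that inference can proceed. A `False` for `flash_attn` with `torch` present usually points at a failed or mismatched build.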

Direct inference in Python

- Enter the Python environment

- Enter the commands one at a time

```python
import torch
from transformers import AutoModelForCausalLM, AutoTokenizer
from transformers.generation.utils import GenerationConfig

# Load the tokenizer and model from the local clone; trust_remote_code lets Orion's own model code run.
tokenizer = AutoTokenizer.from_pretrained(r"/home/ubuntu/model/OrionStarAI/Orion-14B-Chat-Plugin", use_fast=False, trust_remote_code=True)
model = AutoModelForCausalLM.from_pretrained(r"/home/ubuntu/model/OrionStarAI/Orion-14B-Chat-Plugin", device_map="auto",
                                             torch_dtype=torch.bfloat16, trust_remote_code=True)
model.generation_config = GenerationConfig.from_pretrained(r"/home/ubuntu/model/OrionStarAI/Orion-14B-Chat-Plugin")

# Send a single user message ("Hello! What is your name?") and print the reply.
messages = [{"role": "user", "content": "你好! 你叫什么名字!"}]
response = model.chat(tokenizer, messages, streaming=False)
print(response)
```
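The commands above send a single message. To keep a running conversation, the message list can be carried from one call to the next. The sketch below is an assumption on two counts: that `model.chat` accepts earlier turns in the same `{"role": ..., "content": ...}` format, and that replies are stored under the role `"assistant"`. Neither is confirmed here. `chat_turn` and `generate` are hypothetical names.

```python
def chat_turn(history, user_text, generate):
    """Run one turn: add the user message, call generate, add the reply.

    generate is any callable that maps a message list to a reply string.
    Returns the updated history and the reply; history itself is not mutated.
    """
    messages = list(history) + [{"role": "user", "content": user_text}]
    reply = generate(messages)
    return messages + [{"role": "assistant", "content": reply}], reply
```

With the model loaded as above, `generate` could be `lambda msgs: model.chat(tokenizer, msgs, streaming=False)`, and each call to `chat_turn` would then return the history to pass into the next one.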

Additional dependencies

```text
python-dotenv==1.0.0
openpyxl==3.1.2
```
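To see whether the environment already matches these two pins, the installed versions can be compared against them. This is a minimal sketch built on the standard library's `importlib.metadata`. It handles only exact `name==version` lines, and the helper names are hypothetical.

```python
from importlib import metadata

PINS = """\
python-dotenv==1.0.0
openpyxl==3.1.2
"""


def parse_pins(text):
    """Parse 'name==version' lines into a dict, skipping blanks and comments."""
    pins = {}
    for line in text.splitlines():
        line = line.strip()
        if not line or line.startswith("#"):
            continue
        name, _, version = line.partition("==")
        pins[name.strip()] = version.strip()
    return pins


def installed_version(name):
    """Return the installed version of a distribution, or None if absent."""
    try:
        return metadata.version(name)
    except metadata.PackageNotFoundError:
        return None


def check_pins(pins, lookup=installed_version):
    """Return {name: (wanted, found)} for every pin that is not satisfied."""
    mismatches = {}
    for name, wanted in pins.items():
        found = lookup(name)
        if found != wanted:
            mismatches[name] = (wanted, found)
    return mismatches
```

`check_pins(parse_pins(PINS))` returns an empty dict when both packages are installed at the pinned versions.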

References

https://github.com/chatchat-space/Langchain-Chatchat/wiki/支持列表#llm-模型支持列表