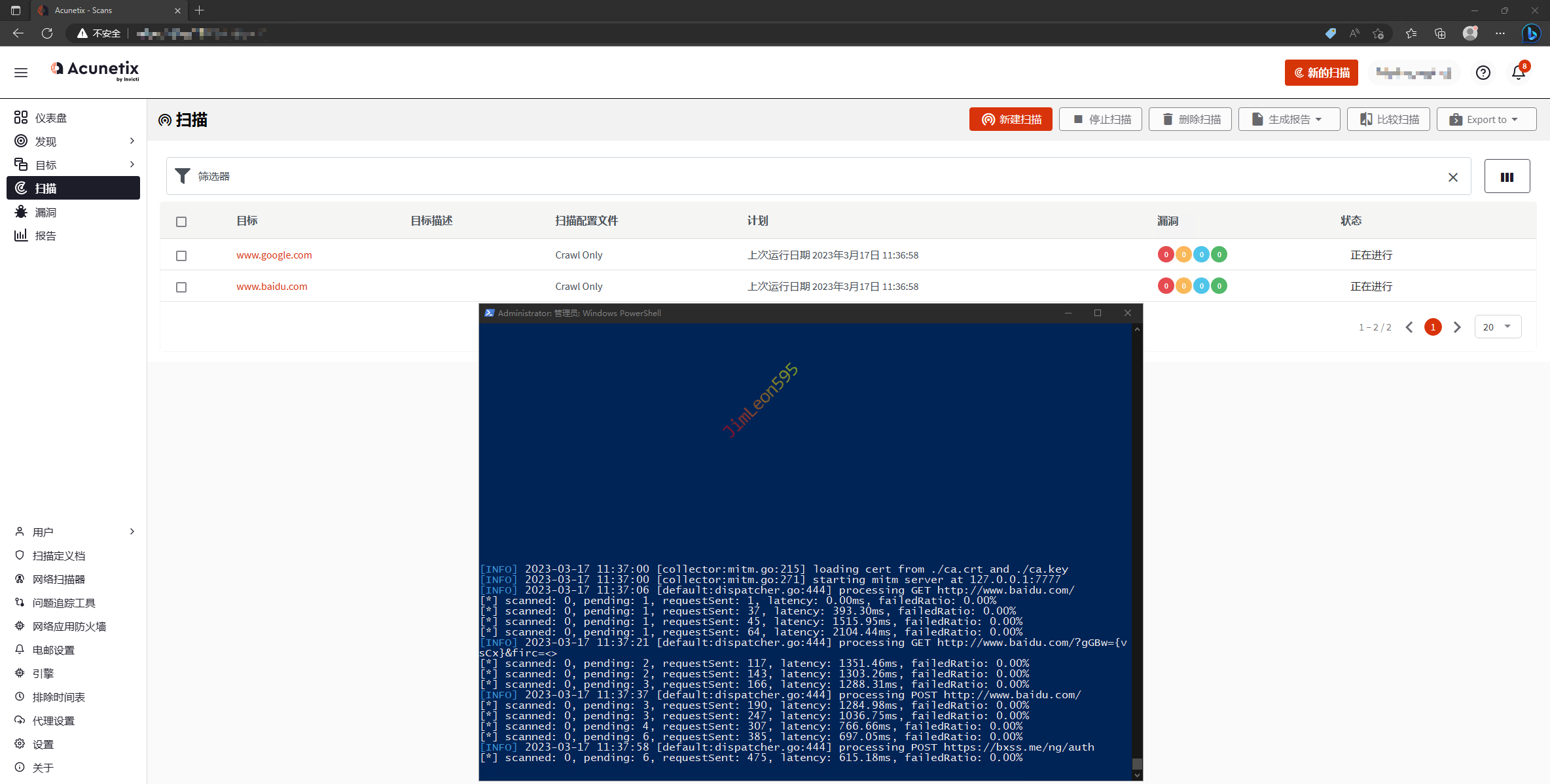

    import json

    import requests
    import urllib3
    from urllib3.exceptions import InsecureRequestWarning

    # Every request below uses verify=False, so silence the resulting warnings.
    urllib3.disable_warnings(InsecureRequestWarning)

    apikey = '**************************************************************'
    headers = {'Content-Type': 'application/json', 'X-Auth': apikey}


    def addTask(url, target):
        """Register a target and return its target_id, or None on failure."""
        try:
            url = ''.join((url, '/api/v1/targets/add'))
            data = {"targets": [{"address": target, "description": ""}], "groups": []}
            r = requests.post(url, headers=headers, data=json.dumps(data), timeout=30, verify=False)
            result = json.loads(r.content.decode())
            return result['targets'][0]['target_id']
        except Exception as e:
            # Return None, not the exception object: an exception is truthy
            # and would pass the `if target_id:` check in scan().
            print(e)
            return None


    def scan(url, target, Crawl, user_agent, profile_id, proxy_address, proxy_port):
        """Add the target, apply its configuration, then start a scan."""
        scanUrl = ''.join((url, '/api/v1/scans'))
        target_id = addTask(url, target)
        if target_id:
            data = {"target_id": target_id, "profile_id": profile_id, "incremental": False,
                    "schedule": {"disable": False, "start_date": None, "time_sensitive": False}}
            try:
                configuration(url, target_id, proxy_address, proxy_port, Crawl, user_agent)
                response = requests.post(scanUrl, data=json.dumps(data), headers=headers, timeout=30, verify=False)
                result = json.loads(response.content)
                return result['target_id']
            except Exception as e:
                print(e)
        return None


    def configuration(url, target_id, proxy_address, proxy_port, Crawl, user_agent):
        """Set the user agent and proxy for the target; the proxy is enabled only when Crawl is true."""
        configuration_url = ''.join((url, '/api/v1/targets/{0}/configuration'.format(target_id)))
        data = {"scan_speed": "sequential", "login": {"kind": "none"}, "ssh_credentials": {"kind": "none"},
                "sensor": False, "user_agent": user_agent, "case_sensitive": "auto",
                "limit_crawler_scope": True, "excluded_paths": [], "authentication": {"enabled": False},
                "proxy": {"enabled": Crawl, "protocol": "http", "address": proxy_address, "port": proxy_port},
                "technologies": [], "custom_headers": [], "custom_cookies": [], "debug": False,
                "client_certificate_password": "", "issue_tracker_id": "", "excluded_hours_id": ""}
        requests.patch(url=configuration_url, data=json.dumps(data), headers=headers, timeout=30, verify=False)


    def main():
        Crawl = True
        proxy_address = '127.0.0.1'
        proxy_port = '7777'
        awvs_url = 'https://127.0.0.1:3443'
        # One target URL per line.
        with open(r'C:\Users\Administrator\Desktop\ScanURL.txt', 'r', encoding='utf-8') as f:
            targets = f.readlines()
        # Default scan profile.
        profile_id = "11111111-1111-1111-1111-111111111111"
        user_agent = "Mozilla/5.0 (Windows NT 6.1; WOW64) AppleWebKit/537.21 (KHTML, like Gecko) Chrome/41.0.2228.0 Safari/537.21"
        if Crawl:
            # Profile used when Crawl is enabled.
            profile_id = "11111111-1111-1111-1111-111111111117"
        for target in targets:
            target = target.strip()
            if not target:
                continue  # skip blank lines in the target file
            if scan(awvs_url, target, Crawl, user_agent, profile_id, proxy_address, int(proxy_port)):
                print("{0} added successfully".format(target))


    if __name__ == '__main__':
        main()
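
The target-file handling and the scan request body in the script above can be pulled out into pure functions, which makes them easy to check without a running scanner. This is only a minimal sketch: `load_targets` and `build_scan_payload` are hypothetical helper names that do not appear in the original script, and the payload fields are simply copied from the listing rather than taken from any API reference.

```python
import io


def load_targets(lines):
    """Return stripped, non-empty targets in input order, without duplicates."""
    seen = set()
    targets = []
    for line in lines:
        target = line.strip()
        if target and target not in seen:
            seen.add(target)
            targets.append(target)
    return targets


def build_scan_payload(target_id, profile_id):
    """Build the same JSON body that scan() posts to /api/v1/scans."""
    return {
        "target_id": target_id,
        "profile_id": profile_id,
        "incremental": False,
        "schedule": {"disable": False, "start_date": None, "time_sensitive": False},
    }


# Usage: an in-memory file stands in for ScanURL.txt.
sample = io.StringIO("http://a.example\n\nhttp://b.example\nhttp://a.example\n")
cleaned = load_targets(sample)
payload = build_scan_payload("hypothetical-target-id", "11111111-1111-1111-1111-111111111117")
```

Dropping duplicates here is a design choice of the sketch, not something the original script does; it avoids registering the same target twice when the input file repeats a URL.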